Capsules

Sara Sabour, Nicholas Frosst, Geoffrey E. Hinton. Dynamic Routing Between Capsules. 2017.10.26

任星彰 xzhren@pku.edu.cn

Menu

• Capsule vs. Neuron

• Dynamic Routing vs. Attention

• CapsNet vs. Fully Connected CNN

• Reconstruction

• Code

• Discussion


Neuron

Capsule

Neuron -> Capsule

Capsule

• Capsule:

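• Replace the simple neurons in each layer of current neural networks with more complex capsules

• The difference: scalar in, scalar out -> vector in, vector out

• Vectors serve as inputs and outputs, and vectors make good representations

• For example, word2vec vectors represent words well

• A vector represents an entity together with its various properties; it is called the activity vector

• Its length represents the probability that an instance (an object, a visual concept, or a part of one) is present

• Its direction (the part independent of length) encodes properties of the instance (position, color, orientation, shape, etc.)

• Using activity vectors poses new challenges for how capsules compute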

Capsules make a very strong representational assumption: At each location in the image, there is at most one instance of the type of entity that a capsule represents. This assumption, which was motivated by the perceptual phenomenon called “crowding” (Pelli et al. [2004]) … -- Hinton

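capsule vs. traditional neuron

| | Capsule | Traditional neuron |
|---|---|---|
| Input from low-level neuron/capsule | vector ($\mathbf{u}_i$) | scalar ($x_i$) |
| Operation: affine transformation | $\hat{\mathbf{u}}_{j\mid i} = \mathbf{W}_{ij}\mathbf{u}_i$ (Eq. 2) | none |
| Operation: weighting and sum | $\mathbf{s}_j = \sum_i c_{ij}\hat{\mathbf{u}}_{j\mid i}$ (Eq. 2) | $a_j = \sum_{i=1}^{3} w_i x_i + b$ |
| Operation: non-linearity (activation function) | $\mathbf{v}_j = \dfrac{\lVert\mathbf{s}_j\rVert^2}{1+\lVert\mathbf{s}_j\rVert^2}\dfrac{\mathbf{s}_j}{\lVert\mathbf{s}_j\rVert}$ (Eq. 1) | $h_{w,b}(x) = f(a_j)$ |
| Output | vector ($\mathbf{v}_j$) | scalar ($h$) |

Capsule: $\mathbf{u}_1, \mathbf{u}_2, \mathbf{u}_3 \xrightarrow{\mathbf{W}_{1j}, \mathbf{W}_{2j}, \mathbf{W}_{3j}} \hat{\mathbf{u}}_1, \hat{\mathbf{u}}_2, \hat{\mathbf{u}}_3 \xrightarrow{c_1, c_2, c_3} \sum \rightarrow \mathrm{squash}(\cdot) \rightarrow \mathbf{v}_j$

Neuron: $x_1, x_2, x_3, +1 \xrightarrow{w_1, w_2, w_3, b} \sum \rightarrow f(\cdot) \rightarrow h_{w,b}(x)$, where $f(\cdot)$ is sigmoid, tanh, ReLU, etc.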

vector in, vector out VS. scalar in, scalar out
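To make the comparison above concrete, here is a minimal sketch of one traditional neuron and one capsule. It uses plain Python lists instead of tensors (an assumption made to keep it self-contained), and the coupling coefficients `cs` are passed in as fixed numbers; in CapsNet they would come from dynamic routing. The names `neuron`, `capsule`, `squash` and `matvec` are illustrative, not taken from the paper's code.

```python
import math


def squash(s):
    """Eq. 1: keep the direction of s, map its length ||s|| to ||s||^2 / (1 + ||s||^2)."""
    sq_norm = sum(x * x for x in s)
    if sq_norm == 0.0:
        return [0.0] * len(s)
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm)
    return [scale * x for x in s]


def matvec(W, u):
    """Matrix-vector product W u, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, u)) for row in W]


def neuron(x, w, b, f=lambda a: max(0.0, a)):
    """Scalar in, scalar out: h = f(sum_i w_i x_i + b), with ReLU as the default f."""
    a = sum(wi * xi for wi, xi in zip(w, x)) + b
    return f(a)


def capsule(us, Ws, cs):
    """Vector in, vector out: v_j = squash(sum_i c_ij W_ij u_i)."""
    u_hats = [matvec(W, u) for W, u in zip(Ws, us)]          # affine transformation (Eq. 2)
    dim = len(u_hats[0])
    s = [sum(c * u_hat[k] for c, u_hat in zip(cs, u_hats))   # weighting and sum (Eq. 2)
         for k in range(dim)]
    return squash(s)                                          # non-linearity (Eq. 1)


identity = [[1.0, 0.0], [0.0, 1.0]]
h = neuron([1.0, 2.0, 3.0], [0.5, -0.25, 0.1], b=0.2)
v = capsule([[3.0, 0.0], [0.0, 4.0]], [identity, identity], [1.0, 1.0])
print(h)                   # approximately 0.5
print(v, math.hypot(*v))   # s = [3, 4], so ||v|| = 25 / 26
```

Here $\lVert\mathbf{s}\rVert = 5$, yet the capsule's output has length $25/26 \approx 0.96$, below 1: the squash keeps the direction of $\mathbf{s}$ but bounds its length.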

Capsule Input

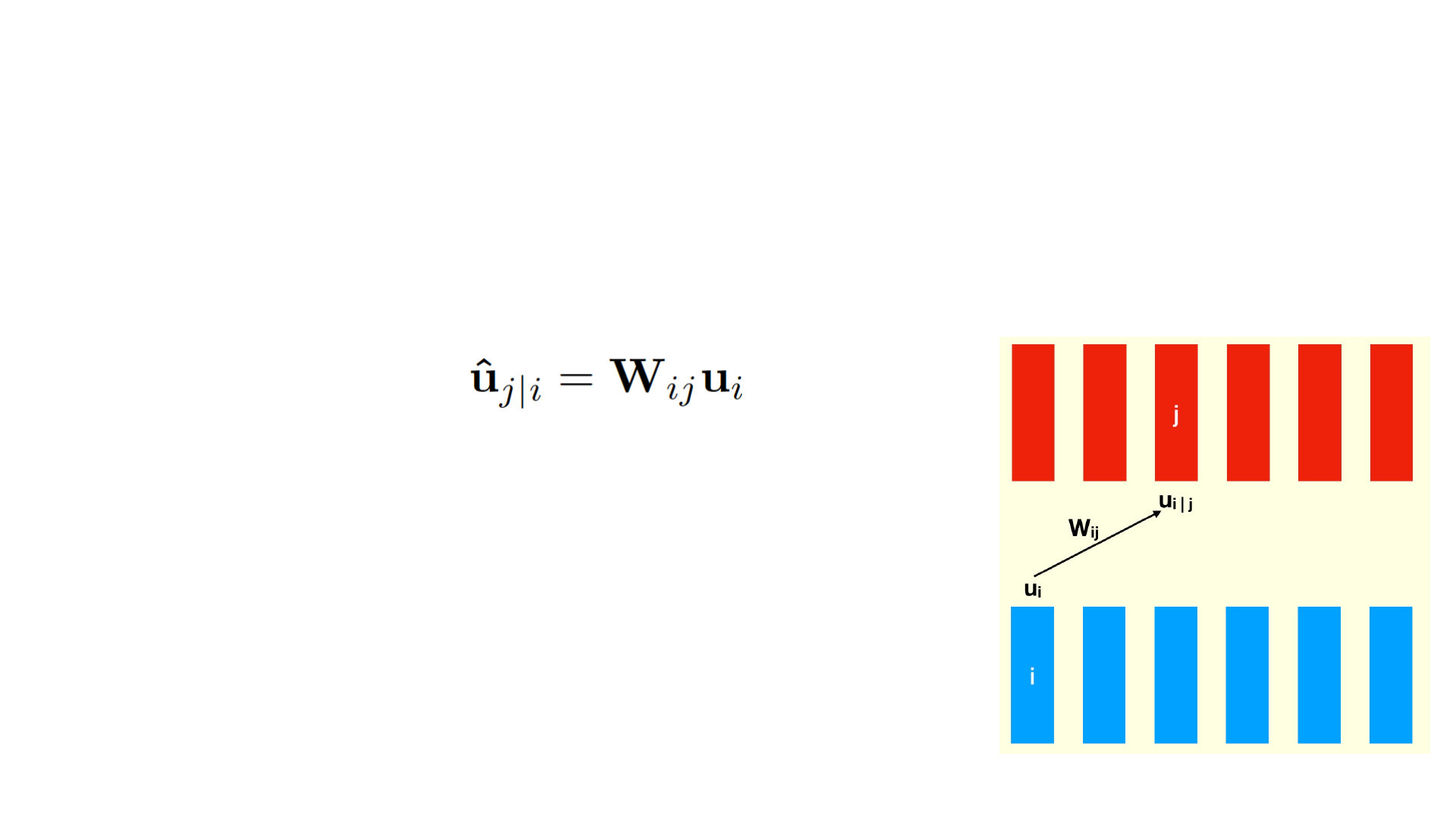

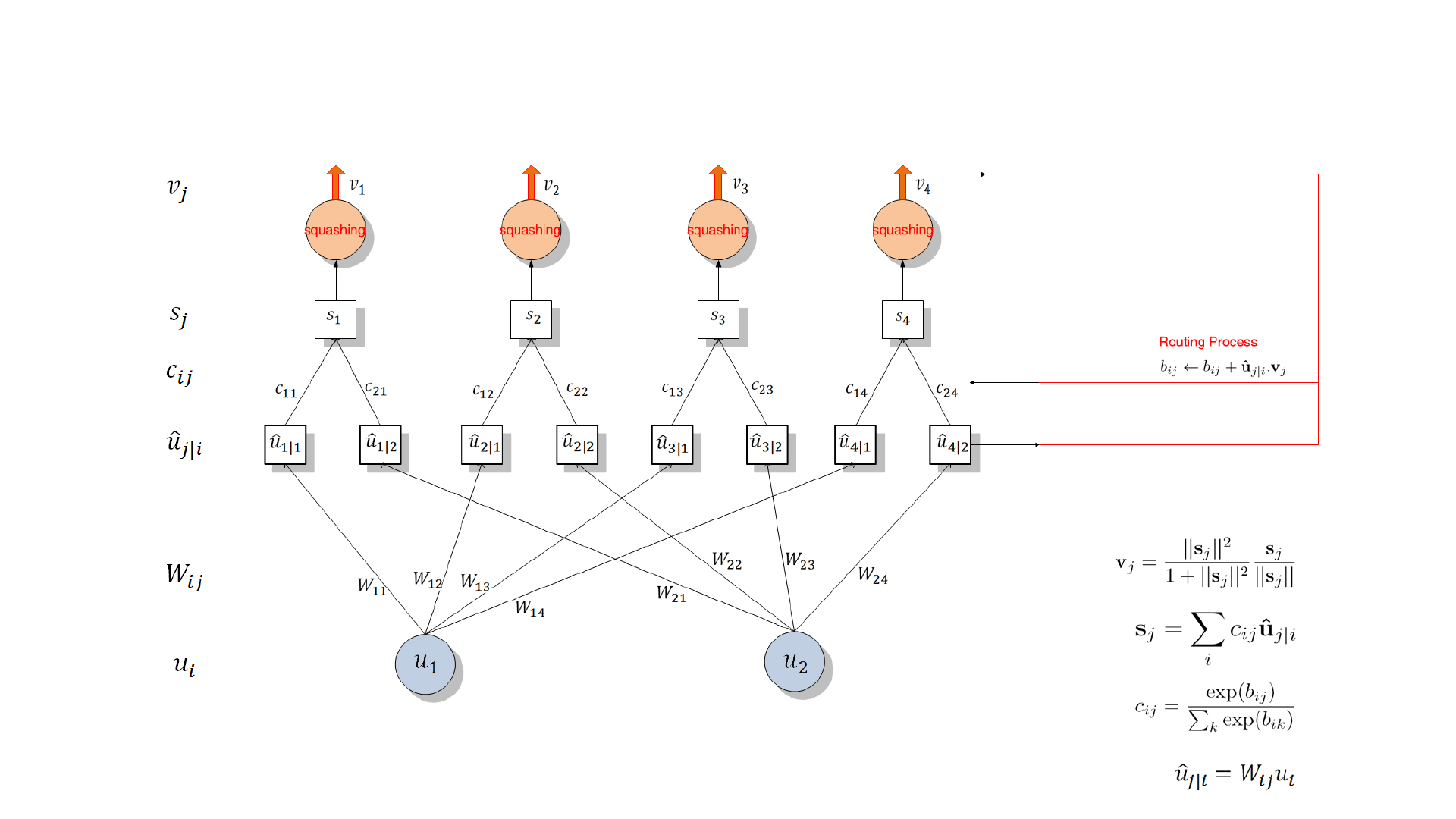

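• A capsule processes its input in two stages: linear combination and routing

• Linear combination:

• The weight, formerly a scalar, becomes a matrix

Capsule vector computed

Here $\mathbf{u}_i$ is the lower-level vector produced by capsule $i$ in the previous layer, and $\hat{\mathbf{u}}_{j\mid i} = \mathbf{W}_{ij}\mathbf{u}_i$ ("u-hat") is the transformed result, which is sent to capsule $j$ in the layer above.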

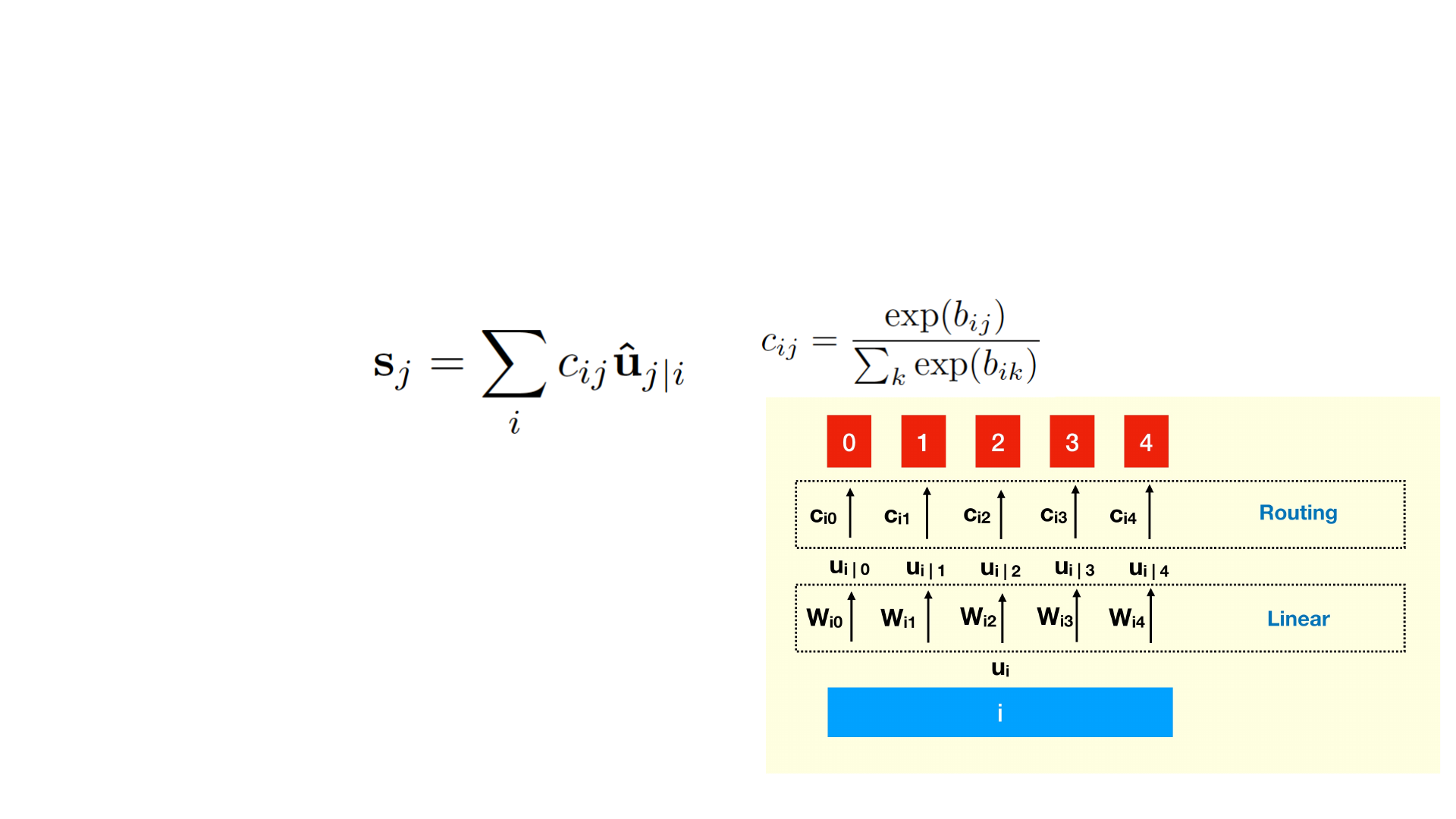


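• Routing:

• A weighted sum of the $\hat{\mathbf{u}}_{j\mid i}$ with weights $c_{ij}$: $\mathbf{s}_j = \sum_i c_{ij}\hat{\mathbf{u}}_{j\mid i}$

$c_{ij}$ is the softmax of $b_{ij}$ over the upper-level capsules, $c_{ij} = \exp(b_{ij}) / \sum_k \exp(b_{ik})$, so for each $i$ the $c_{ij}$ form a normalized distribution. Because softmax exponentially amplifies differences between the logits $b_{ij}$, as routing proceeds only a few $c_{ij}$ end up with large values, and this is what makes it routing: only a few $\hat{\mathbf{u}}_{j\mid i}$ receive large weights, as if the output of a lower-level capsule contributed only to one particular capsule above it.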
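A small pure-Python sketch of the coupling coefficients of a single lower-level capsule $i$ over the 10 DigitCaps capsules. The logit values are made up for illustration: with all $b_{ij} = 0$ (their initial value) the coupling is uniform, and raising one logit concentrates most of the weight on that parent.

```python
import math


def softmax(logits):
    """c_ij = exp(b_ij) / sum_k exp(b_ik); subtracting the max keeps exp() from overflowing."""
    m = max(logits)
    exps = [math.exp(b - m) for b in logits]
    total = sum(exps)
    return [e / total for e in exps]


b_i = [0.0] * 10                 # initial routing logits for capsule i
c_uniform = softmax(b_i)         # every c_ij = 0.1

b_i[3] = 4.0                     # hypothetical: parent j = 3 has accumulated agreement
c_peaked = softmax(b_i)          # c_i3 = e^4 / (e^4 + 9), about 0.86

print([round(c, 3) for c in c_uniform])
print([round(c, 3) for c in c_peaked])
```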

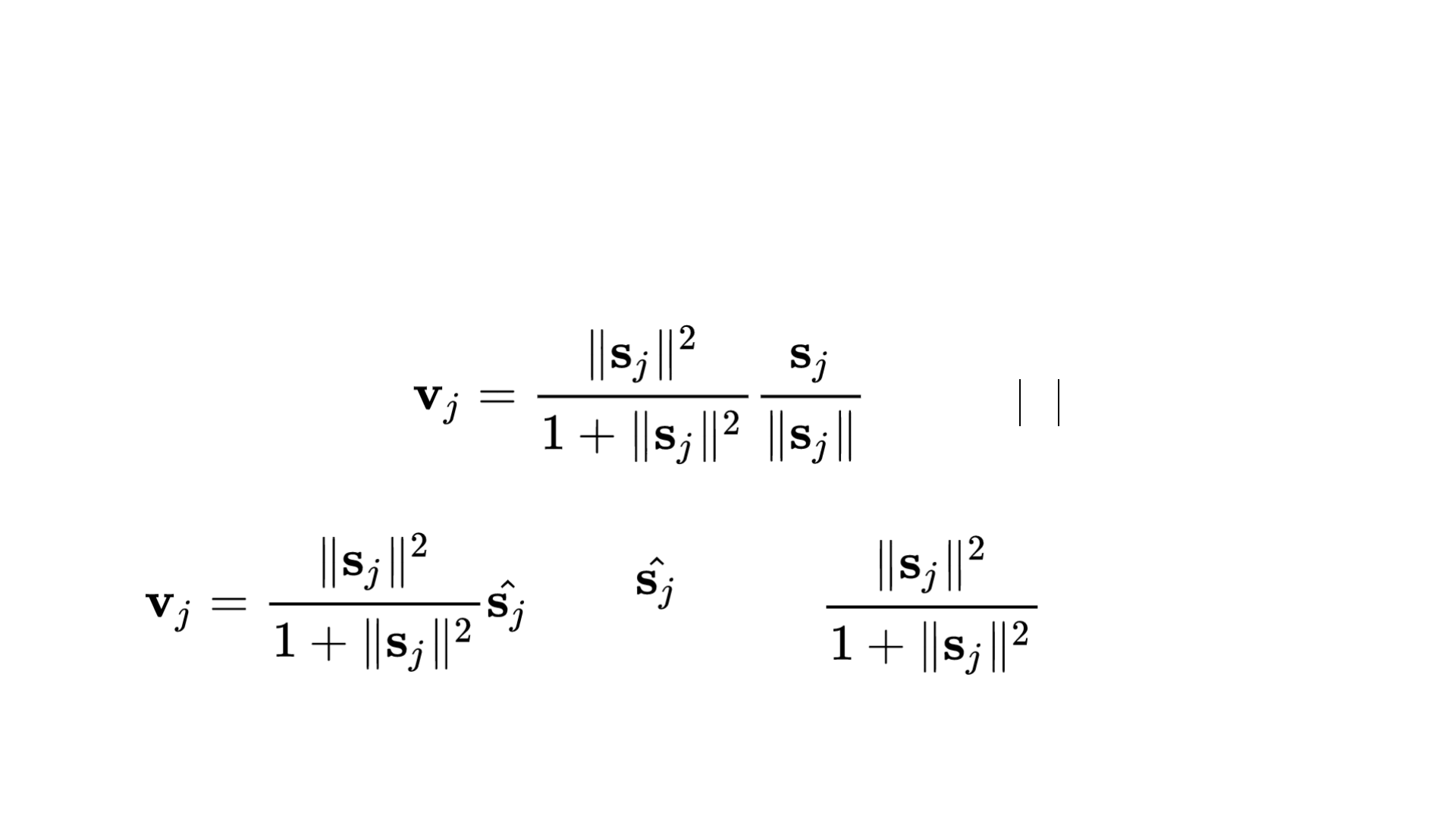

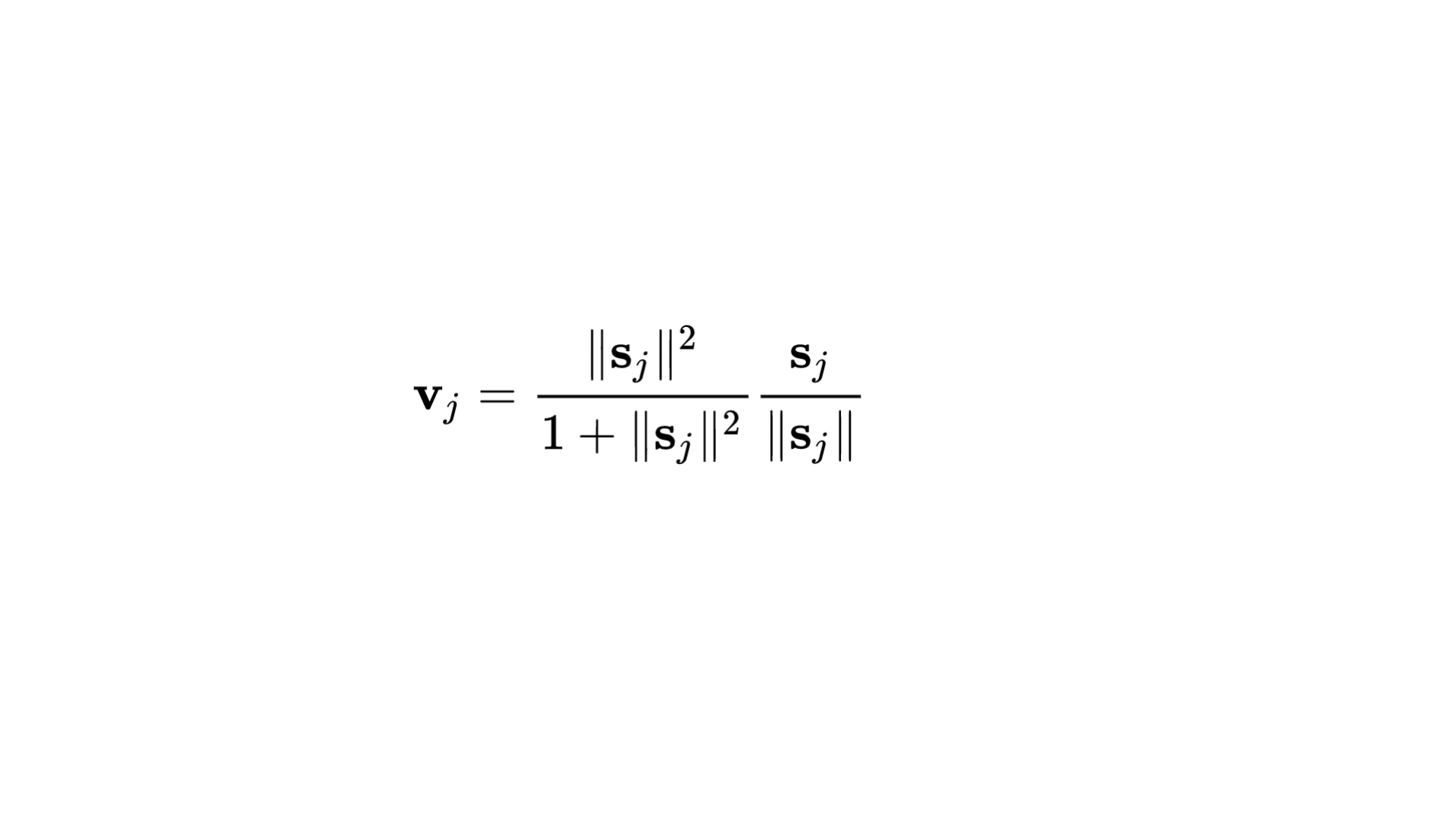

Capsule activation function

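• Squashing: a non-linear function (s is the input, v the output, j the capsule index)

$$\mathbf{v}_j = \frac{\lVert\mathbf{s}_j\rVert^2}{1+\lVert\mathbf{s}_j\rVert^2}\,\frac{\mathbf{s}_j}{\lVert\mathbf{s}_j\rVert}$$

• The factor $\mathbf{s}_j / \lVert\mathbf{s}_j\rVert$ normalizes the vector to unit length

• The factor $\lVert\mathbf{s}_j\rVert^2 / (1+\lVert\mathbf{s}_j\rVert^2)$ rescales the vector length, so $\lVert\mathbf{v}_j\rVert \in [0, 1)$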


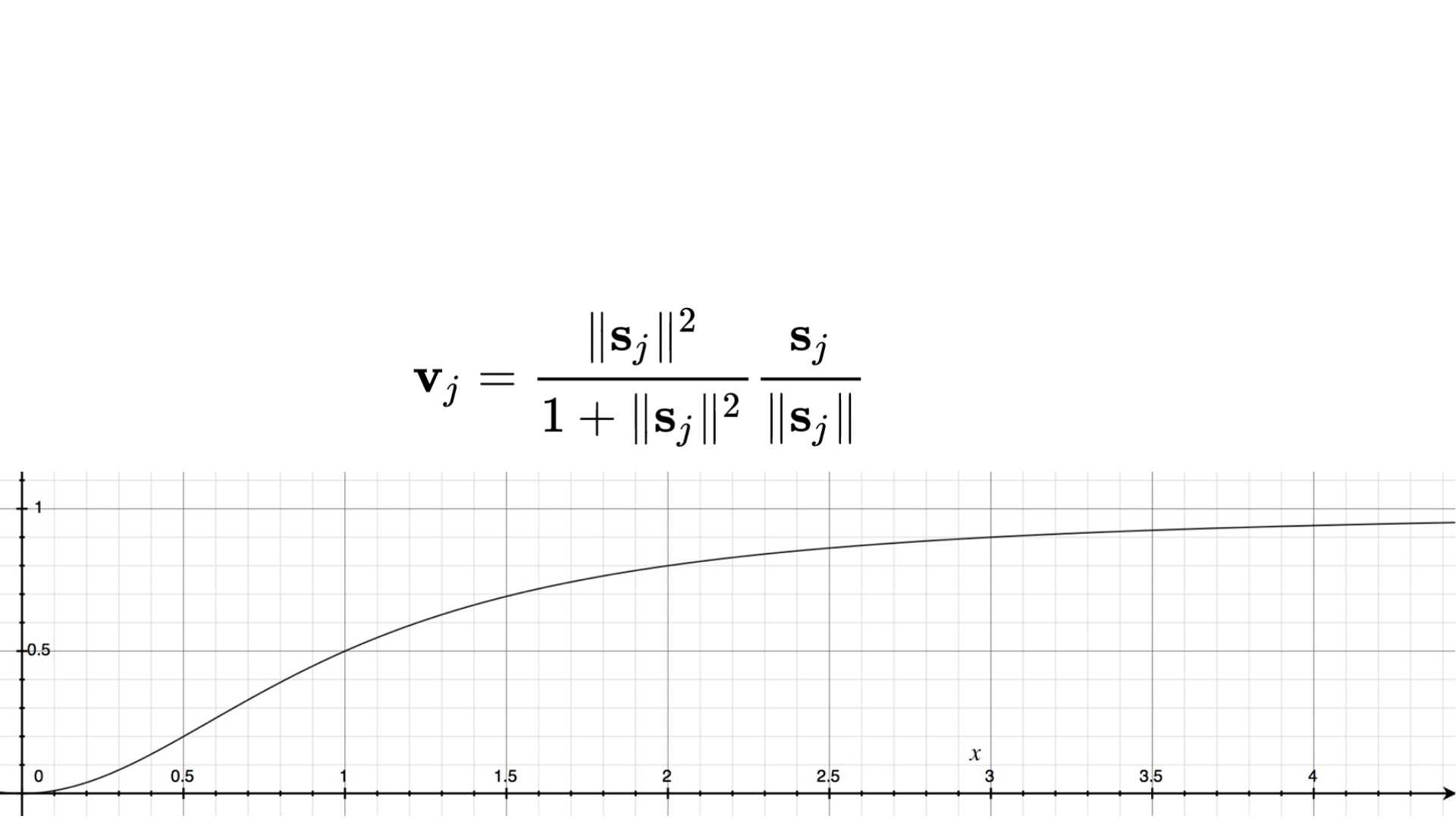


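• This function has two properties:

• Its range is [0, 1), so the length of the output vector can represent a probability.

• It is monotonically increasing, so it "encourages" vectors that were already long and "compresses" vectors that were short.

In other words, the capsule's "activation function" compresses and redistributes vector lengths.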
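Because squash only rescales the length, its behaviour can be checked on the length alone. This pure-Python sketch (the helper name `squashed_length` is illustrative) tabulates $\lVert\mathbf{v}\rVert = \lVert\mathbf{s}\rVert^2 / (1 + \lVert\mathbf{s}\rVert^2)$: short vectors shrink toward 0 roughly as $\lVert\mathbf{s}\rVert^2$, while long ones saturate just below 1.

```python
def squashed_length(norm_s):
    """||squash(s)|| as a function of ||s|| (Eq. 1); the direction of s is unchanged."""
    sq = norm_s * norm_s
    return sq / (1.0 + sq)


norms = [0.0, 0.1, 0.5, 1.0, 2.0, 10.0]
lengths = [squashed_length(n) for n in norms]

assert all(0.0 <= length < 1.0 for length in lengths)      # range [0, 1)
assert all(a < b for a, b in zip(lengths, lengths[1:]))    # strictly increasing

for n, length in zip(norms, lengths):
    print(f"||s|| = {n:4.1f}  ->  ||v|| = {length:.4f}")
# ||s|| =  0.0  ->  ||v|| = 0.0000
# ||s|| =  0.1  ->  ||v|| = 0.0099
# ||s|| =  0.5  ->  ||v|| = 0.2000
# ||s|| =  1.0  ->  ||v|| = 0.5000
# ||s|| =  2.0  ->  ||v|| = 0.8000
# ||s|| = 10.0  ->  ||v|| = 0.9901
```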


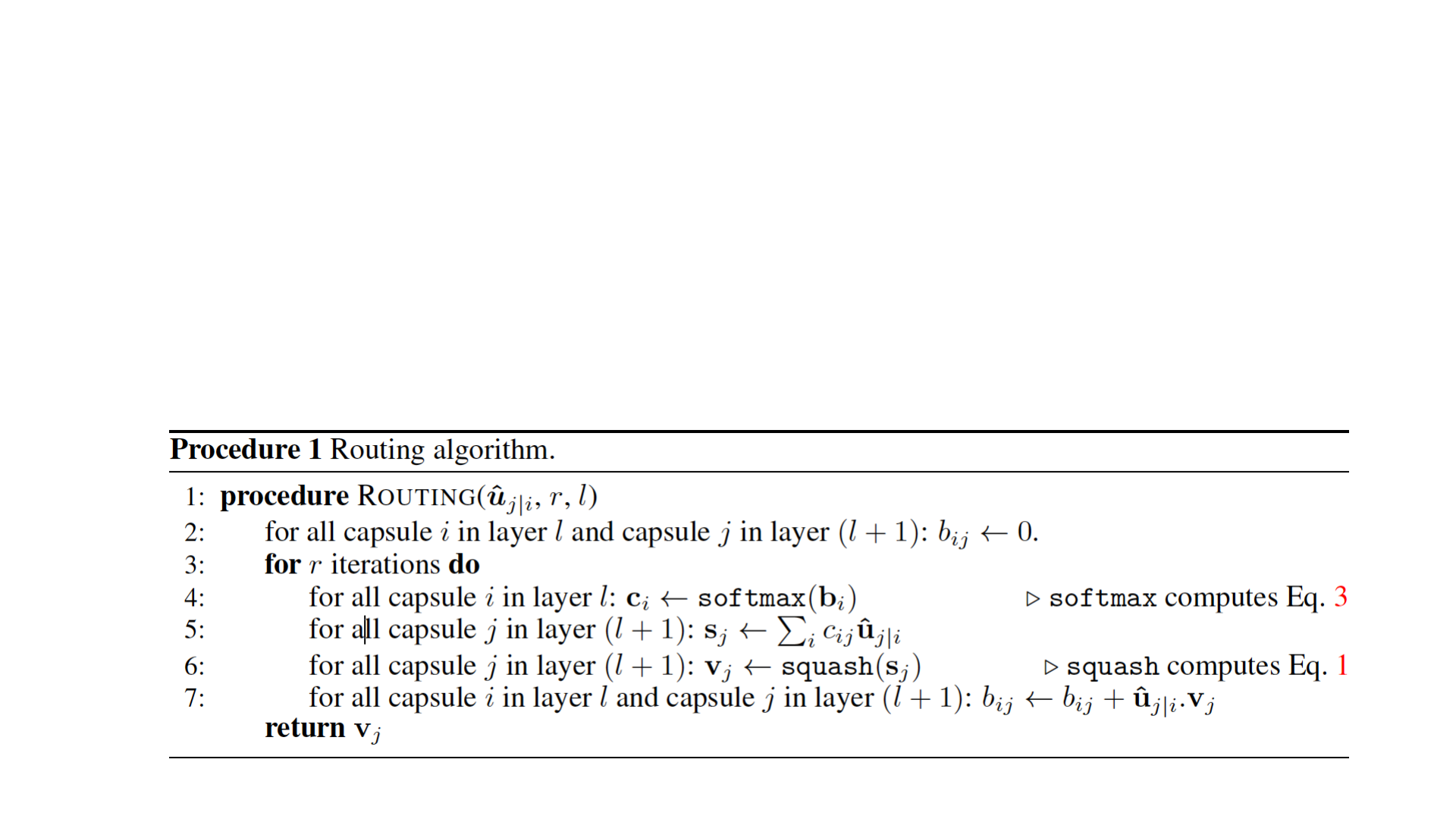

Routing algorithm

Dynamic Routing

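• Input: the prediction vectors $\hat{\mathbf{u}}_{j\mid i} = \mathbf{W}_{ij}\mathbf{u}_i$, computed from the outputs $\mathbf{u}_i$ of all capsules $i$ in capsule $j$'s receptive field

• Output: $\mathbf{v}_j$, the output vector of capsule $j$

• Parameters: $r$: number of routing iterations; $l$: index of the current layer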


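In essence: updating $b_{ij}$ by adding the agreement between each prediction and the current output, $b_{ij} \leftarrow b_{ij} + \hat{\mathbf{u}}_{j\mid i}\cdot\mathbf{v}_j$

The update converges quickly; the paper finds 3 iterations sufficient. Like other algorithms, routing can overfit: more routing iterations can raise training accuracy, but they also increase the generalization error, so the number of iterations should be kept small.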
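The routing loop can be sketched in pure Python as follows: each iteration takes a softmax of $b_{ij}$ over the parents, forms $\mathbf{s}_j = \sum_i c_{ij}\hat{\mathbf{u}}_{j\mid i}$, squashes it, and then adds the agreement $\hat{\mathbf{u}}_{j\mid i}\cdot\mathbf{v}_j$ to $b_{ij}$. As simplifying assumptions, the prediction vectors $\hat{\mathbf{u}}_{j\mid i}$ are supplied directly instead of being computed from $\mathbf{W}_{ij}\mathbf{u}_i$, plain lists stand in for tensors, and the function names are illustrative.

```python
import math


def squash(s):
    sq = sum(x * x for x in s)
    if sq == 0.0:
        return [0.0] * len(s)
    scale = sq / (1.0 + sq) / math.sqrt(sq)
    return [scale * x for x in s]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(u_hat, r=3):
    """u_hat[i][j]: prediction vector from lower capsule i for upper capsule j.

    Returns the upper-capsule outputs v_j and the last coupling coefficients c_ij."""
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]            # routing logits b_ij start at 0
    for _ in range(r):
        c = [softmax(b_i) for b_i in b]                 # softmax over upper capsules j
        s = [[sum(c[i][j] * u_hat[i][j][k] for i in range(n_in)) for k in range(dim)]
             for j in range(n_out)]                     # s_j = sum_i c_ij u_hat_j|i
        v = [squash(s_j) for s_j in s]                  # v_j = squash(s_j)
        for i in range(n_in):
            for j in range(n_out):
                agreement = sum(x * y for x, y in zip(u_hat[i][j], v[j]))
                b[i][j] += agreement                    # b_ij <- b_ij + u_hat_j|i . v_j
    return v, c


# Two lower capsules agree about parent 0 but contradict each other about parent 1.
u_hat = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, -1.0]],
]
v, c = dynamic_routing(u_hat, r=3)
print([round(math.hypot(*v_j), 3) for v_j in v])   # parent 0 is long, parent 1 has length 0
print([round(x, 3) for x in c[0]])                 # capsule 0 routes most of its output to parent 0
```

The agreeing predictions reinforce parent 0 on every iteration, while the contradictory ones cancel out, so parent 1 never gains length.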


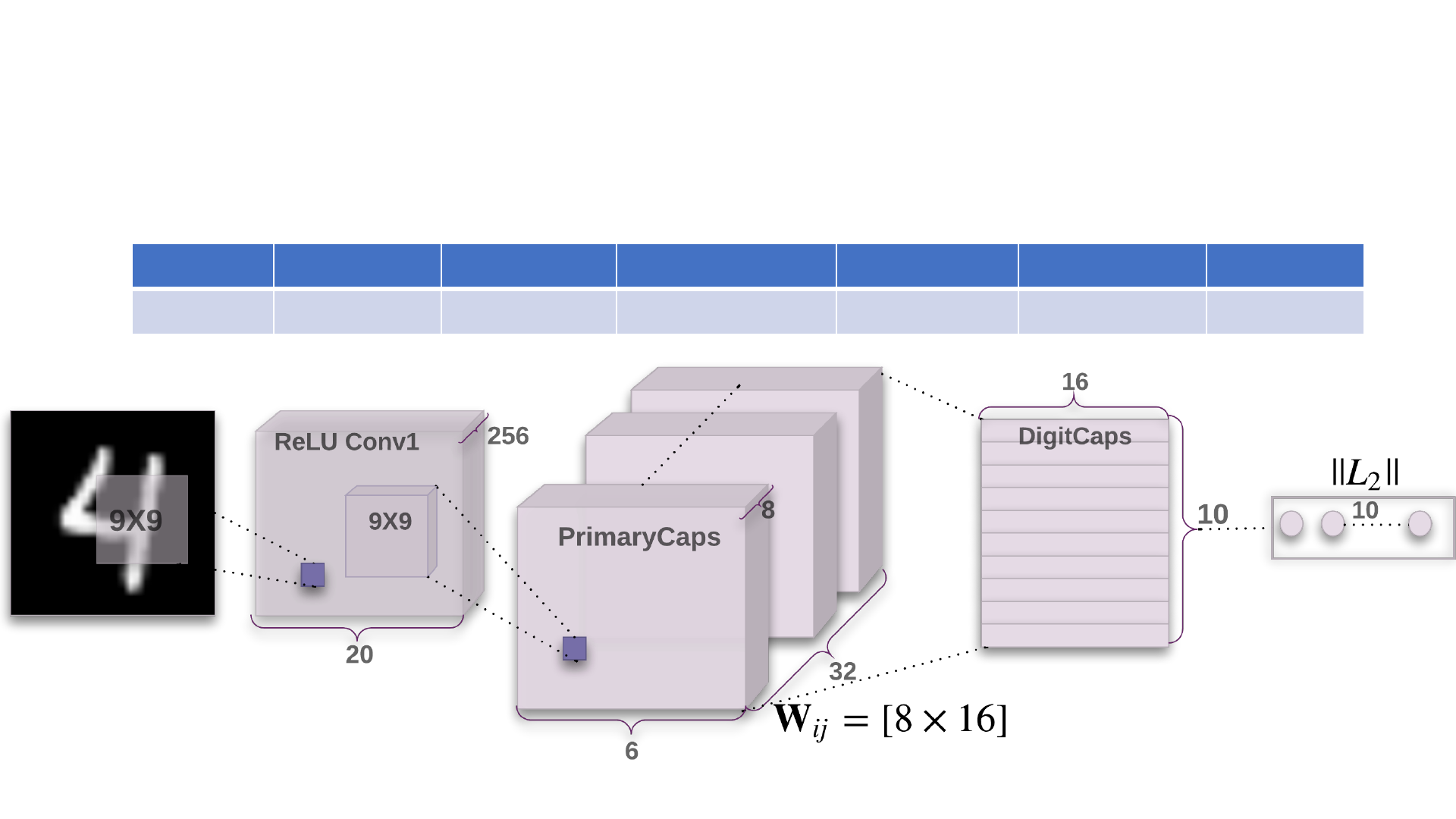

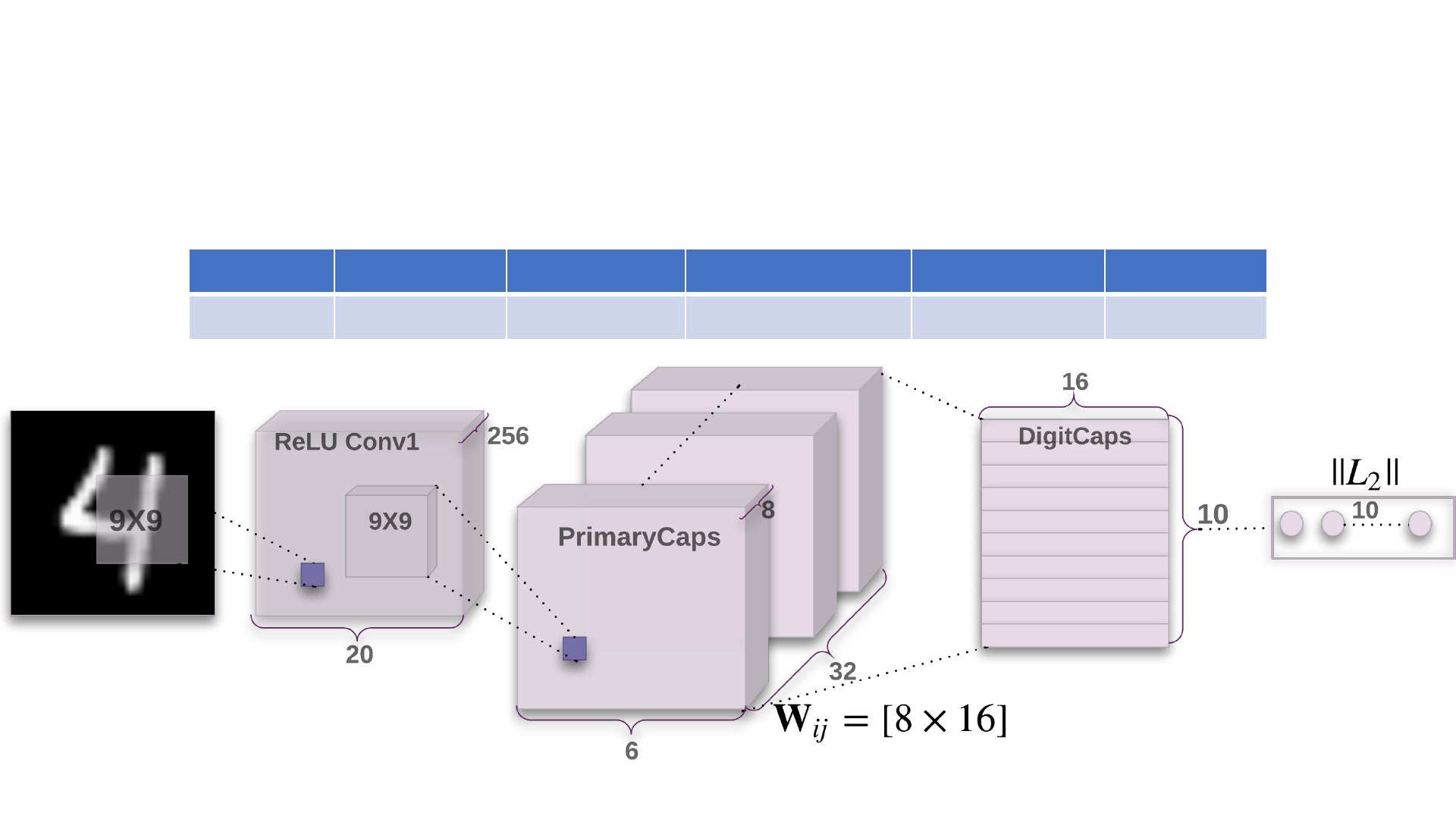

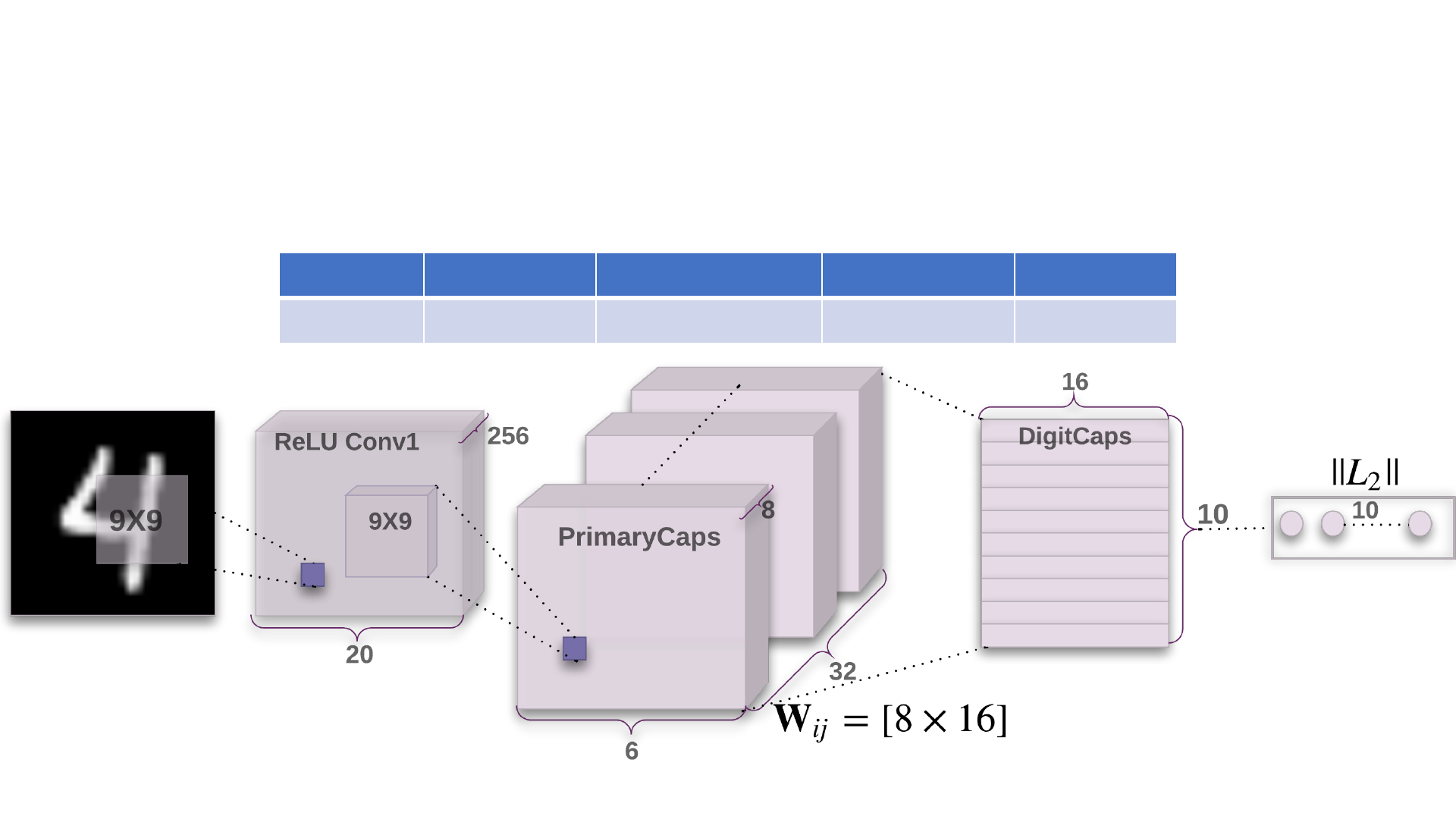

CapsNet

• CapsNet:CNN + Capsule

• CNN 卷积层:相当于视网膜

• Input:28x28

• 256 kernels, 9x9 filter size, strides 1

• Output:20x20x256

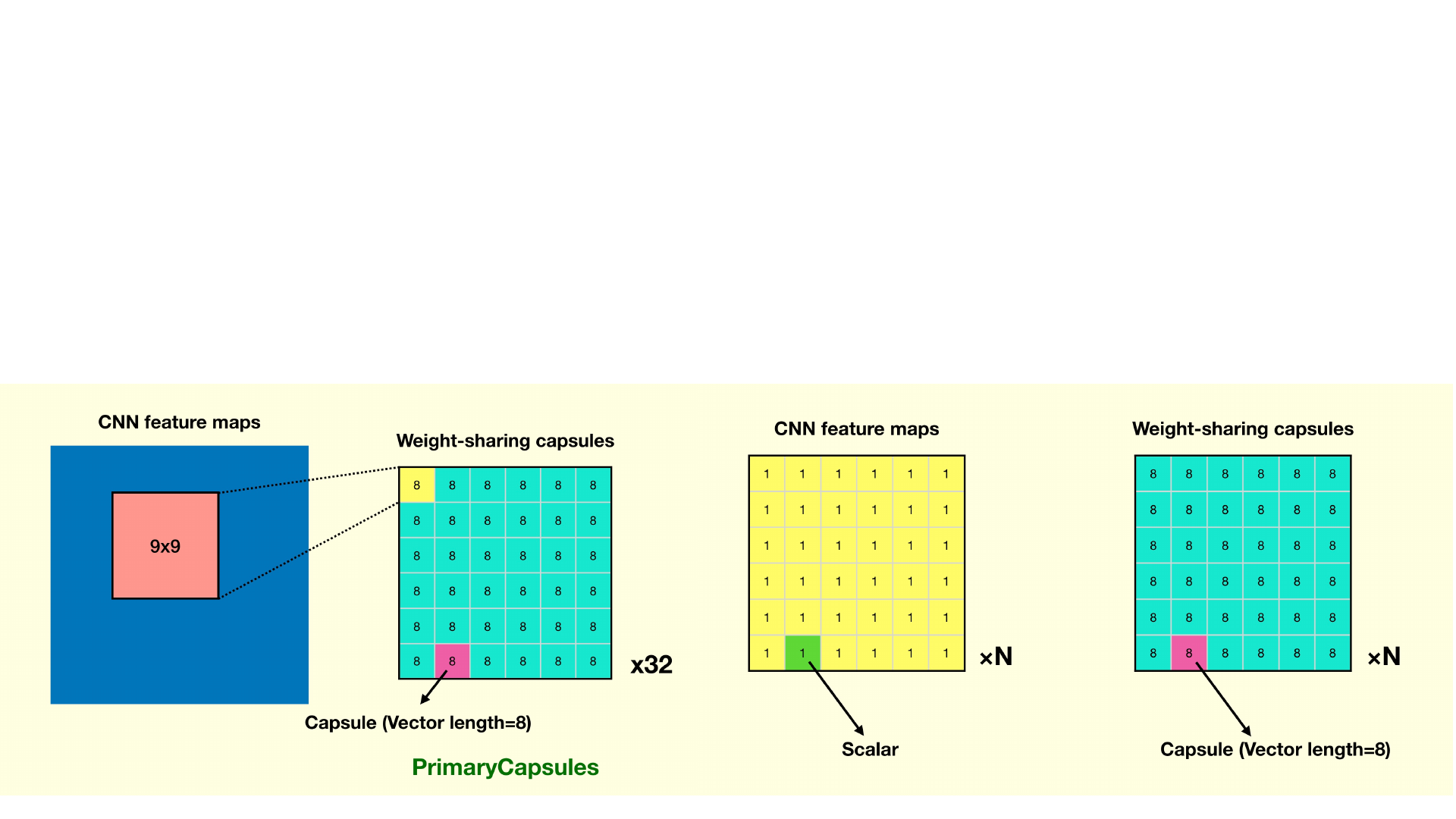

• PrimaryCapsules 胶囊层:相当于初级视皮层

• Input:20x20x256

• 9x9 kernel size, strides 2

• Output:6x6x8x32

• DigitCaps 输出层:相当于大脑判断结果

• 6x6x8,32 -routing-> 16x10


L1. Conv1 layer: Input: 28x28x1, Output: 20x20x256

| Input size | Kernel size | Conv stride | #Channels | Padding | Activation | Pooling |
|---|---|---|---|---|---|---|
| 28x28x1 | 9x9 | 1 | 256 | [0,0,0,0] | ReLU | × |

i ∈ [1, 6x6x32], j ∈ [1, 10]


L2. PrimaryCaps layer: Input: 20x20x256, Output: 6x6x8x32

| Input size | Kernel size | Conv stride | #Channels | Activation | Routing |
|---|---|---|---|---|---|
| 20x20x256 | 9x9 | 2x2 | 32 | Squashing | × |


L3. DigitCaps layer: Input: 8x1 (x 6x6x32 capsules), Output: 16x10

| Input size | Kernel size | #Channels | Activation | Routing |
|---|---|---|---|---|
| 8x1 (x 6x6x32) | n/a (fully connected) | 10 | × | √ |

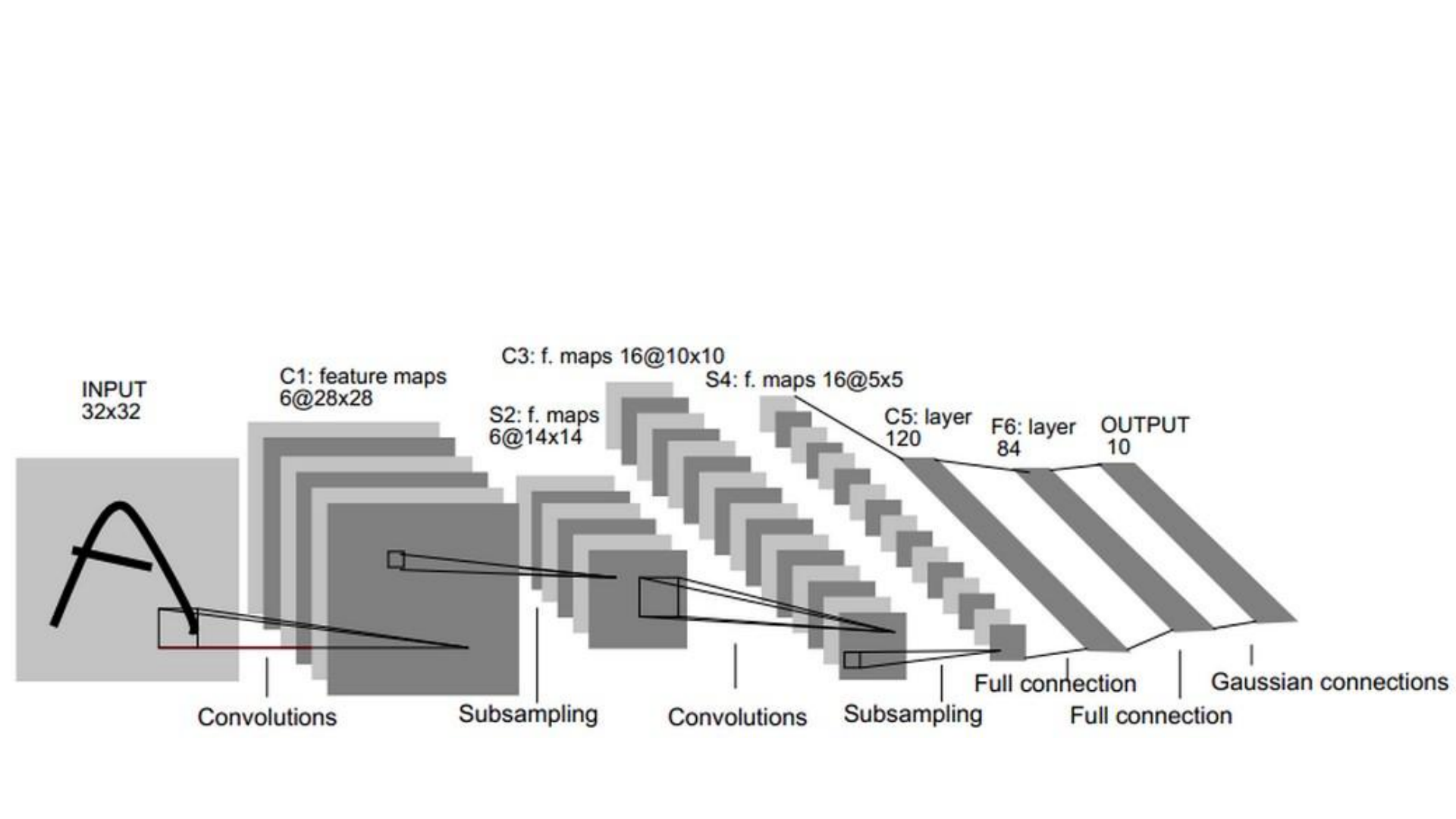
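The shapes in the three tables follow from ordinary convolution arithmetic. This pure-Python sketch recomputes them and counts the weights of the two capsule layers. Counting one bias per PrimaryCaps output channel is an assumption (a standard conv layer has them), and the helper name `conv_out` is illustrative.

```python
def conv_out(size, kernel, stride, padding=0):
    """Output width/height of a convolution; padding=0 corresponds to 'VALID'."""
    return (size + 2 * padding - kernel) // stride + 1


conv1 = conv_out(28, kernel=9, stride=1)            # L1 Conv1: 28 -> 20
primary = conv_out(conv1, kernel=9, stride=2)       # L2 PrimaryCaps: 20 -> 6
num_primary_caps = primary * primary * 32           # 6x6x32 = 1152 capsules, each 8-D

# PrimaryCaps: 9x9 kernels over 256 input channels, 32 x 8 = 256 output channels (+ biases)
primary_weights = 9 * 9 * 256 * (32 * 8) + 32 * 8

# DigitCaps: one 16x8 matrix W_ij for every pair i in [1, 1152], j in [1, 10]
digitcaps_weights = num_primary_caps * 10 * 16 * 8

print(conv1, primary, num_primary_caps)    # 20 6 1152
print(primary_weights, digitcaps_weights)  # 5308672 1474560
```

Most of the parameters therefore sit in the PrimaryCaps convolution and the DigitCaps transformation matrices.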

CapsNet

• LeNet

• 5x5 filters, stride 1, 2x2 pooling


• CapsNet: CNN + Capsule

• The shallow CNN extracts low-level features

• The capsule vectors represent "instances" of objects


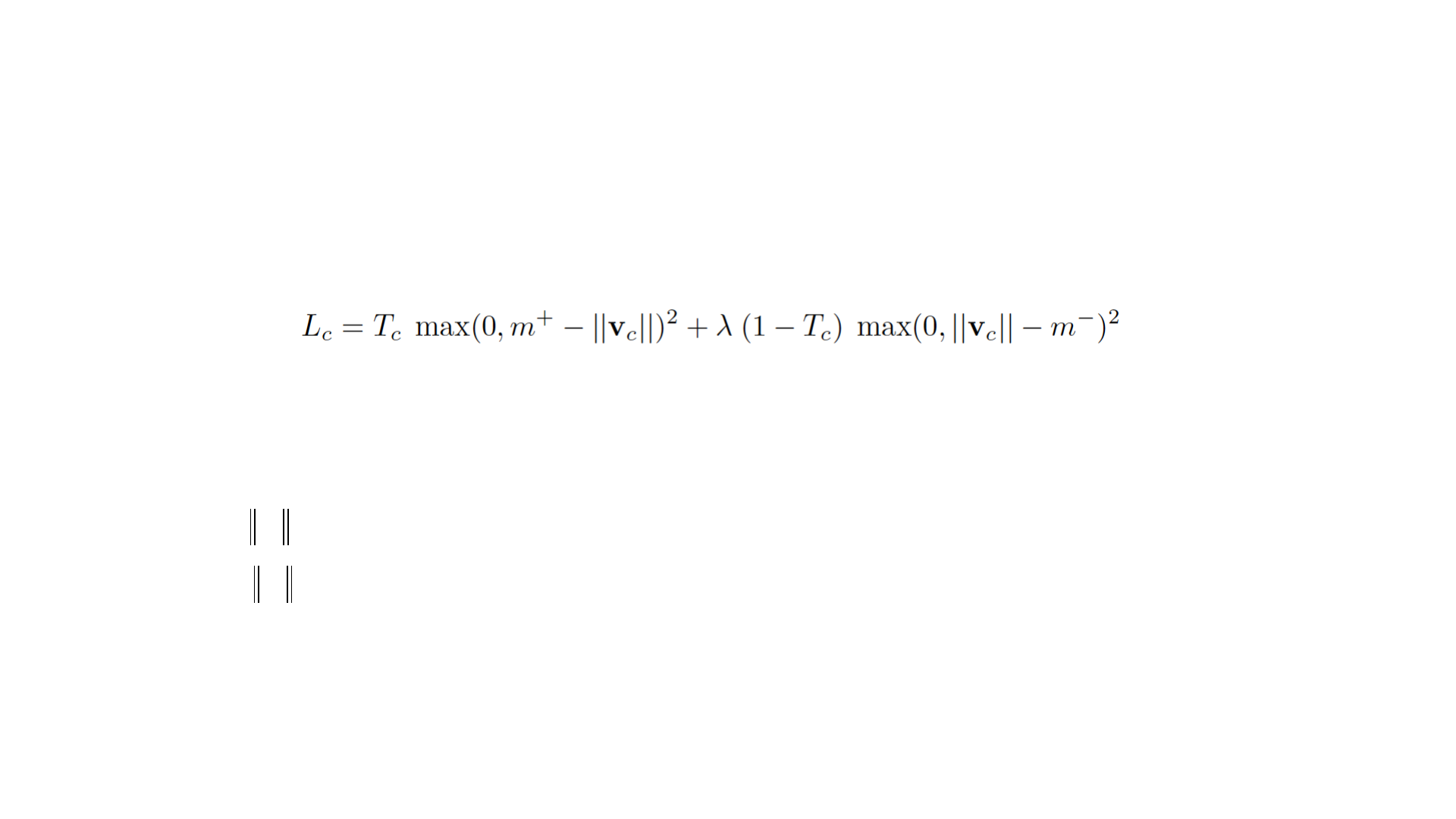

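• A loss commonly used with SVMs (margin loss)

margin loss:

$$L_c = T_c \max(0, m^+ - \lVert\mathbf{v}_c\rVert)^2 + \lambda (1 - T_c) \max(0, \lVert\mathbf{v}_c\rVert - m^-)^2, \qquad L = \sum_c L_c$$

• $c$: class

• $T_c$: indicator function (1 if class $c$ is present, 0 otherwise)

• $m^+$: upper margin; penalizes false negatives, i.e. missing a class that is actually present

• $m^-$: lower margin; penalizes false positives

• $\lambda$: down-weights the loss for absent classes (the paper uses $m^+ = 0.9$, $m^- = 0.1$, $\lambda = 0.5$)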


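• Because the length of an instantiation vector represents whether the entity a capsule stands for is present, we want the top-level capsule for class k (in CapsNet, the output of the DigitCaps layer) to have a long output vector if and only if a digit of class k is present in the image.

• To allow multiple digits in one image, each capsule representing a digit k gets its own separate margin loss.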
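A pure-Python sketch of the per-class margin loss described above, using the paper's values $m^+ = 0.9$, $m^- = 0.1$ and $\lambda = 0.5$ as defaults. The function name and the example lengths are made up for illustration. Because each class contributes its own term, the same function handles an image that contains two digits.

```python
def margin_loss(lengths, targets, m_plus=0.9, m_minus=0.1, lam=0.5):
    """Sum over classes c of T_c max(0, m+ - ||v_c||)^2 + lam (1 - T_c) max(0, ||v_c|| - m-)^2.

    lengths: ||v_c|| for each class capsule; targets: T_c (1 if class c is present, else 0)."""
    total = 0.0
    for v_len, t in zip(lengths, targets):
        present = t * max(0.0, m_plus - v_len) ** 2              # present class that is too short
        absent = lam * (1 - t) * max(0.0, v_len - m_minus) ** 2  # absent class that is too long
        total += present + absent
    return total


# One digit of class 0: classes 0 and 1 are fine, but class 2 is too long.
print(margin_loss([0.95, 0.05, 0.3], [1, 0, 0]))   # only the class-2 term: 0.5 * 0.2^2
# Two digits in one image: each present class gets its own term.
print(margin_loss([0.8, 0.95, 0.0], [1, 1, 0]))    # only the class-0 term: (0.9 - 0.8)^2
```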


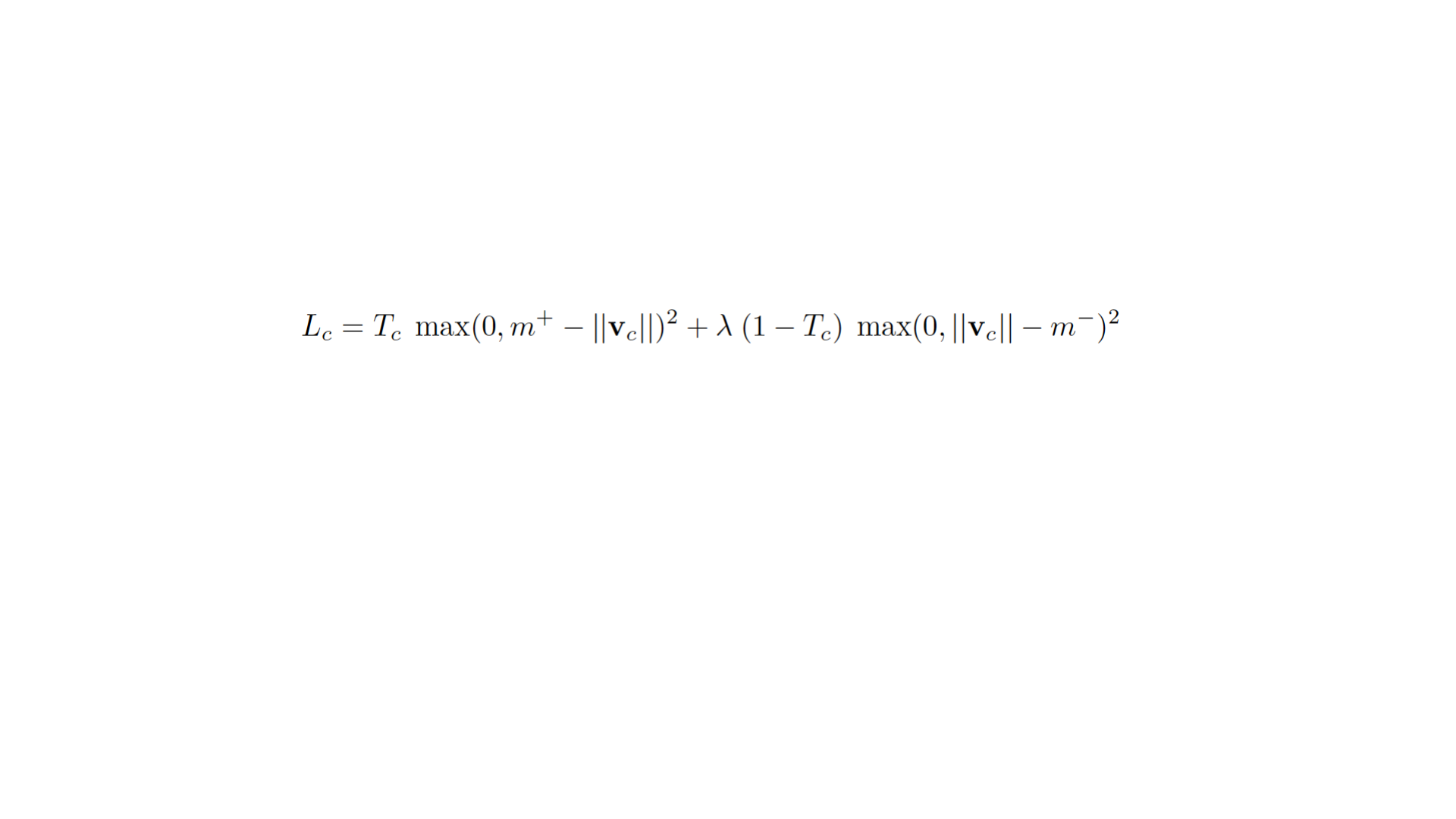

Reconstruction

• Reconstruction is used to verify a key assumption: that each capsule's vector can represent an instance

Reconstruction & Experiment

Experiment

• Reconstruction and correction

• Reconstruction under perturbation of each dimension


Each row shows the reconstruction when one of the 16 dimensions in the DigitCaps representation is tweaked by intervals of 0.05 in the range [-0.25, 0.25]. -- Hinton
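The grid behind this figure is easy to generate. In this pure-Python sketch (names are illustrative), each of the 16 dimensions is shifted by 11 offsets from −0.25 to 0.25 in steps of 0.05. Each tweaked vector would then be fed through the decoder, which is not shown here, and the example input vector is hypothetical.

```python
def perturbation_grid(v, step=0.05, limit=0.25):
    """Rows: one per dimension of v; columns: v with that dimension shifted by -limit..+limit."""
    n = round(limit / step)                              # 5 steps on each side of zero
    offsets = [k * step for k in range(-n, n + 1)]       # -0.25, -0.20, ..., 0.25 (11 values)
    grid = []
    for dim in range(len(v)):
        row = []
        for delta in offsets:
            tweaked = list(v)
            tweaked[dim] += delta
            row.append(tweaked)
        grid.append(row)
    return grid


v = [0.1] * 16                    # hypothetical 16-D DigitCaps vector of the predicted class
grid = perturbation_grid(v)
print(len(grid), len(grid[0]))    # 16 rows x 11 columns
```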

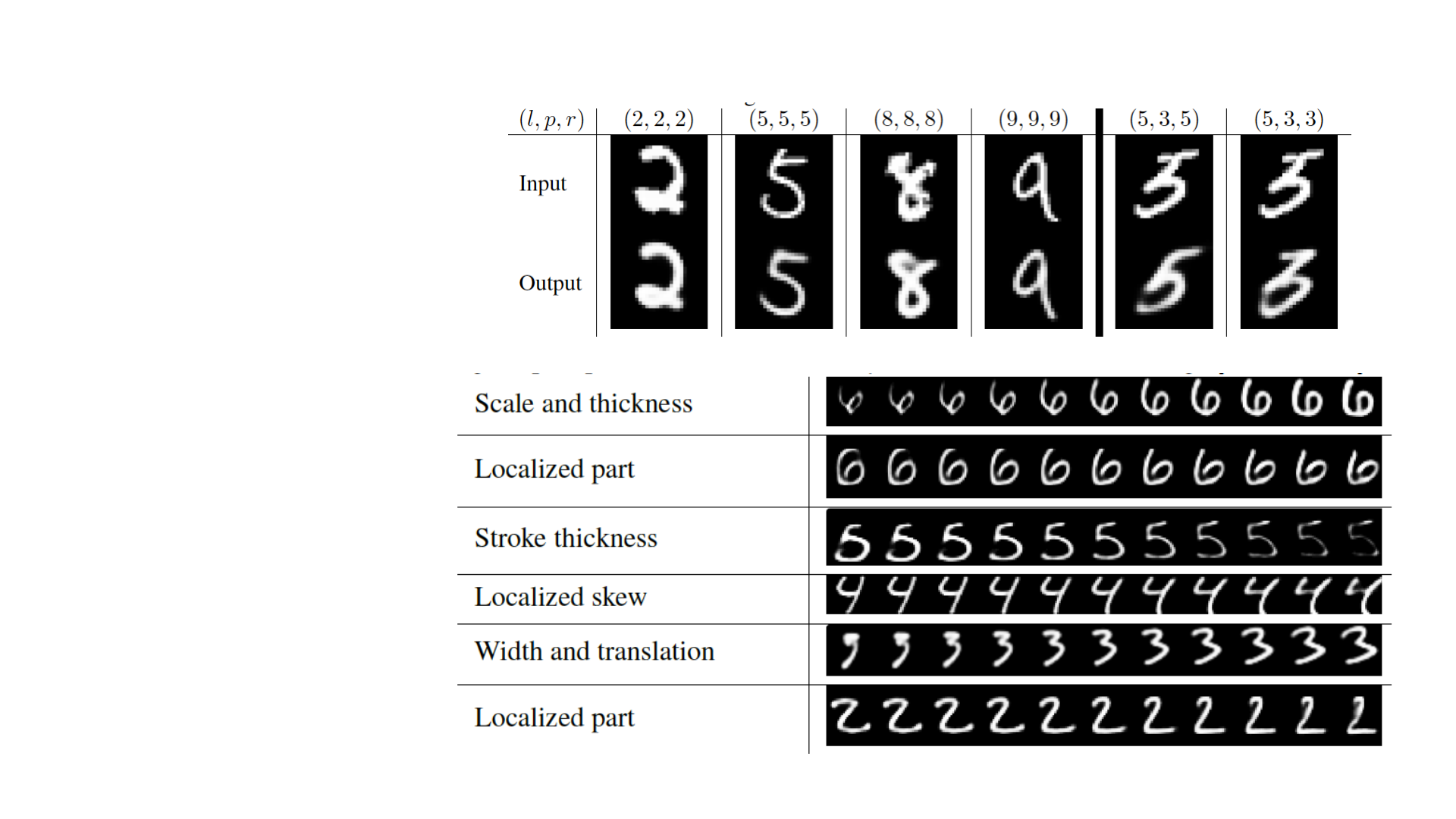

Experiment

• MultiMNIST


The two columns with the (*) mark show reconstructions from a digit that is neither the label nor the prediction. These columns suggests that the model is not just finding the best fit for all the digits in the image including the ones that do not exist. -- Hinton

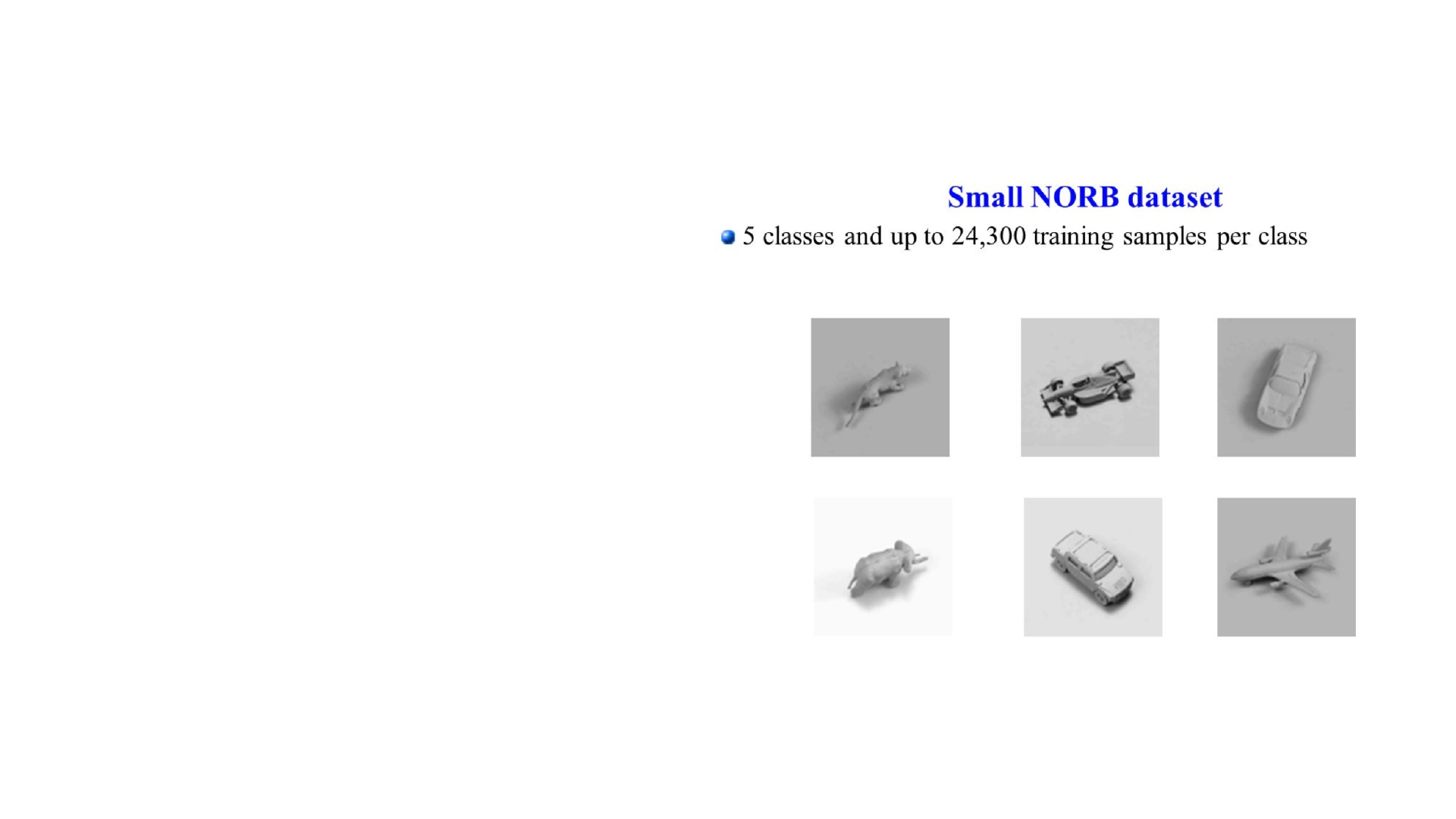

Experiment

• smallNORB – 3D


We also tested the exact same architecture as we used for MNIST on smallNORB (LeCun et al. [2004]) and achieved 2.7% test error rate, which is in-par with the state-of-the-art (Cireşan et al. [2011]). -- Hinton

Routing Iterations


Code

Implementations on GitHub

• TensorFlow: naturomics/CapsNet-Tensorflow

• Keras: XifengGuo/CapsNet-Keras

• PyTorch: nishnik/CapsNet-PyTorch, timomernick/pytorch-capsule, gram-ai/capsule-networks, andreaazzini/capsnet.pytorch, leftthomas/CapsNet

• MXNet: AaronLeong/CapsNet_Mxnet

• Lasagne (Theano): DeniskaMazur/CapsNet-Lasagne

• Chainer: soskek/dynamic_routing_between_capsules

Code Structure

• CapsLayer

• Defines the capsule layers as a class (one object per layer)

• CapsNet

• Defines the overall CapsNet architecture and forward pass

• Main

• Trains the network on the MNIST dataset


CapsNet


```python
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.train, tf.contrib)

# batch_size and get_batch_data() come from the project's config and data utilities;
# they are not shown on this slide.


# The overall CapsNet architecture and forward pass
class CapsNet(object):
    def __init__(self, is_training=True):
        self.graph = tf.Graph()
        with self.graph.as_default():
            if is_training:
                # Fetch one batch of training data
                self.X, self.Y = get_batch_data()
                self.build_arch()
                self.loss()
                # t_vars = tf.trainable_variables()
                self.optimizer = tf.train.AdamOptimizer()
                self.global_step = tf.Variable(0, name='global_step', trainable=False)
                self.train_op = self.optimizer.minimize(self.total_loss, global_step=self.global_step)
            else:
                self.X = tf.placeholder(tf.float32, shape=(batch_size, 28, 28, 1))
                # build_arch() masks DigitCaps with self.Y, so labels must also be fed here
                self.Y = tf.placeholder(tf.float32, shape=(batch_size, 10))
                self.build_arch()
        tf.logging.info('Setting up the main structure')

    # build_arch() builds the whole network architecture
    def build_arch(self):
        pass

    # CapsNet loss: the Margin loss measures classification accuracy,
    # the Reconstruction loss measures how well the image is reconstructed
    def loss(self):
        pass
```


```python
import tensorflow as tf  # TensorFlow 1.x API

# batch_size and epsilon (a small constant that keeps sqrt() away from 0) come from the
# project's config; CapsLayer is defined in the CapsLayer code below.


# The overall CapsNet architecture and forward pass
class CapsNet(object):
    def __init__(self, is_training=True):
        pass

    # build_arch() builds the whole network architecture
    def build_arch(self):
        # First, an ordinary convolutional layer
        with tf.variable_scope('Conv1_layer'):
            # Conv1 output: [batch_size, 20, 20, 256]
            # Convolve the input image X with 256 9x9 kernels, stride 1, no padding ('VALID')
            conv1 = tf.contrib.layers.conv2d(self.X, num_outputs=256,
                                             kernel_size=9, stride=1,
                                             padding='VALID')
            assert conv1.get_shape() == [batch_size, 20, 20, 256]

        # PrimaryCaps layer from the paper; its output is [batch_size, 1152, 8, 1]
        with tf.variable_scope('PrimaryCaps_layer'):
            # CapsLayer builds the second convolutional layer: eight ordinary convolutions,
            # each with 32 9x9 kernels and stride 2, whose outputs are combined position by
            # position into vectors of length 8
            primaryCaps = CapsLayer(num_outputs=32, vec_len=8, with_routing=False, layer_type='CONV')
            caps1 = primaryCaps(conv1, kernel_size=9, stride=2)
            assert caps1.get_shape() == [batch_size, 1152, 8, 1]

        # DigitCaps layer; returns a tensor of shape [batch_size, 10, 16, 1]
        with tf.variable_scope('DigitCaps_layer'):
            # The last layer: one 16-D vector per class (10 classes), computed with routing
            digitCaps = CapsLayer(num_outputs=10, vec_len=16, with_routing=True, layer_type='FC')
            self.caps2 = digitCaps(caps1)

        # Decoder from Fig. 2 of the paper: reconstruct the whole image from one 16-D vector.
        # Every capsule output except the target one is masked out.
        with tf.variable_scope('Masking'):
            # Vector lengths = class probabilities, [batch_size, 10, 1, 1]; loss() needs these
            # whether or not the label is used for masking
            self.v_length = tf.sqrt(tf.reduce_sum(tf.square(self.caps2), axis=2, keepdims=True) + epsilon)
            # mask_with_y: select the target capsule with the true label
            mask_with_y = True
            if mask_with_y:
                # [batch_size, 16, 10] x [batch_size, 10, 1] -> [batch_size, 16, 1]
                self.masked_v = tf.matmul(tf.squeeze(self.caps2, axis=3),
                                          tf.reshape(self.Y, (-1, 10, 1)),
                                          transpose_a=True)

        # Reconstruct the MNIST image with 3 fully connected layers of 512, 1024 and 784 units
        # [batch_size, 16, 1] => [batch_size, 16] => [batch_size, 512]
        with tf.variable_scope('Decoder'):
            vector_j = tf.reshape(self.masked_v, shape=(batch_size, -1))
            fc1 = tf.contrib.layers.fully_connected(vector_j, num_outputs=512)
            assert fc1.get_shape() == [batch_size, 512]
            fc2 = tf.contrib.layers.fully_connected(fc1, num_outputs=1024)
            assert fc2.get_shape() == [batch_size, 1024]
            self.decoded = tf.contrib.layers.fully_connected(fc2, num_outputs=784, activation_fn=tf.sigmoid)

    # CapsNet loss: Margin loss (classification) + Reconstruction loss
    def loss(self):
        pass
```
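Masking keeps exactly one 16-D vector for the decoder. The `tf.matmul` with the one-hot label above amounts to selecting the labelled capsule. This pure-Python sketch (hypothetical helper `mask_capsules`, 2-D vectors for brevity) shows that, plus picking the longest capsule when no label is given; that fallback is an assumption about how a `mask_with_y=False` branch could behave.

```python
import math


def mask_capsules(caps, one_hot=None):
    """Return the single capsule vector passed to the decoder.

    caps: one vector per class. With a one-hot label this computes caps^T y (the masking
    matmul); without one, it falls back to the longest capsule, i.e. the predicted class."""
    if one_hot is None:
        j = max(range(len(caps)), key=lambda k: math.sqrt(sum(x * x for x in caps[k])))
        return list(caps[j])
    dim = len(caps[0])
    return [sum(y * caps[j][k] for j, y in enumerate(one_hot)) for k in range(dim)]


caps = [[0.1, 0.0], [0.6, 0.3], [0.0, 0.2]]    # 3 classes, 2-D vectors for brevity
print(mask_capsules(caps, one_hot=[0, 0, 1]))  # [0.0, 0.2]: the labelled capsule
print(mask_capsules(caps))                     # [0.6, 0.3]: the longest capsule
```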


```python
import tensorflow as tf  # TensorFlow 1.x API

# Margin-loss hyperparameters from the paper; batch_size comes from the project's config.
m_plus = 0.9
m_minus = 0.1
lambda_val = 0.5


# CapsNet loss: the Margin loss measures classification accuracy,
# the Reconstruction loss measures how well the image is reconstructed
def loss(self):
    # First the Margin loss. Each DigitCaps vector length is the probability of its class,
    # so the loss is computed from the vector lengths: [batch_size, 10, 1, 1]
    # max_l = max(0, m_plus - ||v_c||)^2
    max_l = tf.square(tf.maximum(0., m_plus - self.v_length))
    # max_r = max(0, ||v_c|| - m_minus)^2
    max_r = tf.square(tf.maximum(0., self.v_length - m_minus))
    assert max_l.get_shape() == [batch_size, 10, 1, 1]

    # Reshape [batch_size, 10, 1, 1] to [batch_size, 10] to line up with the one-hot labels
    max_l = tf.reshape(max_l, shape=(batch_size, -1))
    max_r = tf.reshape(max_r, shape=(batch_size, -1))

    # T_c: [batch_size, 10], the class indicator. With T_c = Y (one-hot), the first
    # element-wise product below is non-zero only for the true class
    T_c = self.Y
    # [batch_size, 10]: element-wise products give the per-class Margin loss
    L_c = T_c * max_l + lambda_val * (1 - T_c) * max_r
    self.margin_loss = tf.reduce_mean(tf.reduce_sum(L_c, axis=1))

    # Reconstruction loss: sum of squared differences between the FC Sigmoid outputs and the
    # original pixel intensities (summed over pixels, averaged over the batch)
    original = tf.reshape(self.X, shape=(batch_size, -1))
    squared = tf.square(self.decoded - original)
    self.reconstruction_err = tf.reduce_mean(tf.reduce_sum(squared, axis=1))

    # Total loss: the paper scales the Reconstruction loss by 0.0005
    # so that it does not dominate the Margin loss during training
    self.total_loss = self.margin_loss + 0.0005 * self.reconstruction_err

    # TensorBoard summaries
    tf.summary.scalar('margin_loss', self.margin_loss)
    tf.summary.scalar('reconstruction_loss', self.reconstruction_err)
    tf.summary.scalar('total_loss', self.total_loss)
    recon_img = tf.reshape(self.decoded, shape=(batch_size, 28, 28, 1))
    tf.summary.image('reconstruction_img', recon_img)
    self.merged_sum = tf.summary.merge_all()
```
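For a single image, the total loss can be sketched in plain Python as below. The Margin term is taken as a given number, and the reconstruction term sums the squared pixel differences and is scaled by 0.0005, as in the paper. The function names and example images are illustrative.

```python
def reconstruction_loss(decoded, original):
    """Sum of squared differences between decoder outputs and pixel intensities."""
    return sum((d - o) ** 2 for d, o in zip(decoded, original))


def total_loss(margin, decoded, original, scale=0.0005):
    """Margin loss plus the down-weighted reconstruction loss."""
    return margin + scale * reconstruction_loss(decoded, original)


original = [0.0] * 784            # hypothetical blank 28x28 image, flattened
decoded = [0.1] * 784             # hypothetical decoder output
recon = reconstruction_loss(decoded, original)   # about 784 * 0.01 = 7.84
print(recon, total_loss(0.02, decoded, original))
```

Even with every pixel off by 0.1, the scaled reconstruction term adds only about 0.004. This is how the 0.0005 factor keeps it from dominating the margin loss.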

CapsLayer


```python
# Capsule layers are defined as a class; each layer is an object
class CapsLayer(object):
    '''Capsule layer.

    Args:
        input: a 4-D tensor
        num_outputs: number of capsule units in this layer
        vec_len: length of each capsule's output vector
        layer_type: 'FC' or 'CONV', i.e. a fully connected or a convolutional layer
        with_routing: whether this layer's output vectors are obtained by routing
            from the layer below

    Returns:
        a 4-D tensor
    '''

    def __init__(self, num_outputs, vec_len, with_routing=True, layer_type='FC'):
        self.num_outputs = num_outputs
        self.vec_len = vec_len
        self.with_routing = with_routing
        self.layer_type = layer_type

    def __call__(self, input, kernel_size=None, stride=None):
        '''
        'kernel_size' and 'stride' are used only when layer_type is 'CONV'.
        '''
        # Build the layer
        if self.layer_type == 'CONV':
            pass
        if self.layer_type == 'FC':
            pass
```


```python
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

# batch_size, squash() and routing() are defined elsewhere in the project.


def __call__(self, input, kernel_size=None, stride=None):
    '''
    'kernel_size' and 'stride' are used only when layer_type is 'CONV'.
    '''
    # Convolutional capsule layer
    if self.layer_type == 'CONV':
        self.kernel_size = kernel_size
        self.stride = stride
        # PrimaryCaps has no routing
        if not self.with_routing:
            # PrimaryCaps (the second CapsNet layer) takes the Conv1 output as input:
            # [batch_size, 20, 20, 256]
            assert input.get_shape() == [batch_size, 20, 20, 256]
            # Each of the vec_len (= 8) vector components comes from its own standard
            # 9x9 convolution with 32 kernels
            capsules = []
            for i in range(self.vec_len):
                # One component of every capsule: [batch_size, 6, 6, 32], i.e. 6x6x1x32
                with tf.variable_scope('ConvUnit_' + str(i)):
                    caps_i = tf.contrib.layers.conv2d(input, self.num_outputs,
                                                      self.kernel_size, self.stride,
                                                      padding="VALID")
                    # Flatten the convolution output and add it to the list
                    caps_i = tf.reshape(caps_i, shape=(batch_size, -1, 1, 1))
                    capsules.append(caps_i)
            # Each entry holds one component for all 6x6x32 = 1152 capsules
            assert capsules[0].get_shape() == [batch_size, 1152, 1, 1]
            # Concatenate into the PrimaryCaps output: 1152 vectors of length 8,
            # i.e. [batch_size, 1152, 8, 1]
            capsules = tf.concat(capsules, axis=2)
            # Apply the squash non-linearity to each capsule vector
            capsules = squash(capsules)
            assert capsules.get_shape() == [batch_size, 1152, 8, 1]
            return capsules

    # Fully connected capsule layer
    if self.layer_type == 'FC':
        # DigitCaps uses routing
        if self.with_routing:
            # DigitCaps, the third CapsNet layer, is fully connected.
            # Reshape the input to [batch_size, 1152, 1, 8, 1]
            self.input = tf.reshape(input, shape=(batch_size, -1, 1, input.shape[-2].value, 1))
            with tf.variable_scope('routing'):
                # Routing logits b_IJ start at zero: [1, num_caps_l, num_caps_l_plus_1, 1, 1]
                b_IJ = tf.constant(np.zeros([1, input.shape[1].value, self.num_outputs, 1, 1],
                                            dtype=np.float32))
                # routing() updates the coupling coefficients and returns the squashed
                # outputs v_J: [batch_size, 1, 10, 16, 1]
                capsules = routing(self.input, b_IJ)
                # Drop axis 1 (tf.squeeze, not squash) to get [batch_size, 10, 16, 1]
                capsules = tf.squeeze(capsules, axis=1)
            return capsules
```
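The reshape-and-concat step in the PrimaryCaps branch above can be checked on a small scale. This pure-Python sketch (illustrative names, nested lists instead of tensors) mimics it: each of the 8 conv units yields a 6×6×32 map, each map is flattened in row-major (height, width, channel) order, as `tf.reshape` does, and component k of capsule n is taken from map k. Capsule n therefore corresponds to grid position (n // 32 // 6, n // 32 % 6) and channel n % 32.

```python
def flatten_hwc(feature_map):
    """Row-major flatten of an H x W x C nested list, matching tf.reshape's ordering."""
    return [value for row in feature_map for pixel in row for value in pixel]


def primary_capsules(conv_units):
    """conv_units[k] is the H x W x C output of conv unit k; returns H*W*C vectors."""
    flat = [flatten_hwc(fm) for fm in conv_units]            # one flat list per component
    return [list(components) for components in zip(*flat)]  # concat along the vector axis


H, W, C, VEC_LEN = 6, 6, 32, 8
# Fake conv outputs whose values encode (unit, row, col, channel) so the mapping is visible.
conv_units = [[[[(k, r, c, ch) for ch in range(C)] for c in range(W)] for r in range(H)]
              for k in range(VEC_LEN)]
caps = primary_capsules(conv_units)

n = 100
row, col, ch = n // C // W, n // C % W, n % C
print(len(caps), len(caps[0]))   # 1152 capsules, each with 8 components
print(caps[n][0], (row, col, ch))
```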


Discussion

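• Contributions:

• Capsule:

• Neurons move from scalar in, scalar out to vector in, vector out; vector outputs have a larger representational space than scalar outputs

• Encapsulation of entity concepts, closer to how the real world is abstracted

• Dynamic Routing:

• Dynamic: the coupling coefficients are computed for each input rather than fixed after training

• Bottom-up selective activation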


There are many possible ways to implement the general idea of capsules. The aim of this paper is not to explore this whole space but to simply show that one fairly straightforward implementation works well and that dynamic routing helps. -- Hinton


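• Possible improvements:

• The squash function: any function that is non-linear and compresses the vector's L2 norm into [0, 1]

• The routing procedure: clustering, EM routing, etc.

• A capsule version of the convolutional layer, to address the large number of parameters

• Possible applications:

• Representation learning

• GAN

• Video processing, etc.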


Reference

• https://arxiv.org/pdf/1710.09829.pdf

• https://github.com/naturomics/CapsNet-Tensorflow

• https://github.com/XifengGuo/CapsNet-Keras

• https://hackernoon.com/what-is-a-capsnet-or-capsule-network-2bfbe48769cc

• https://www.zhihu.com/question/67287444/answer/251460831

• https://openreview.net/pdf?id=HJWLfGWRb